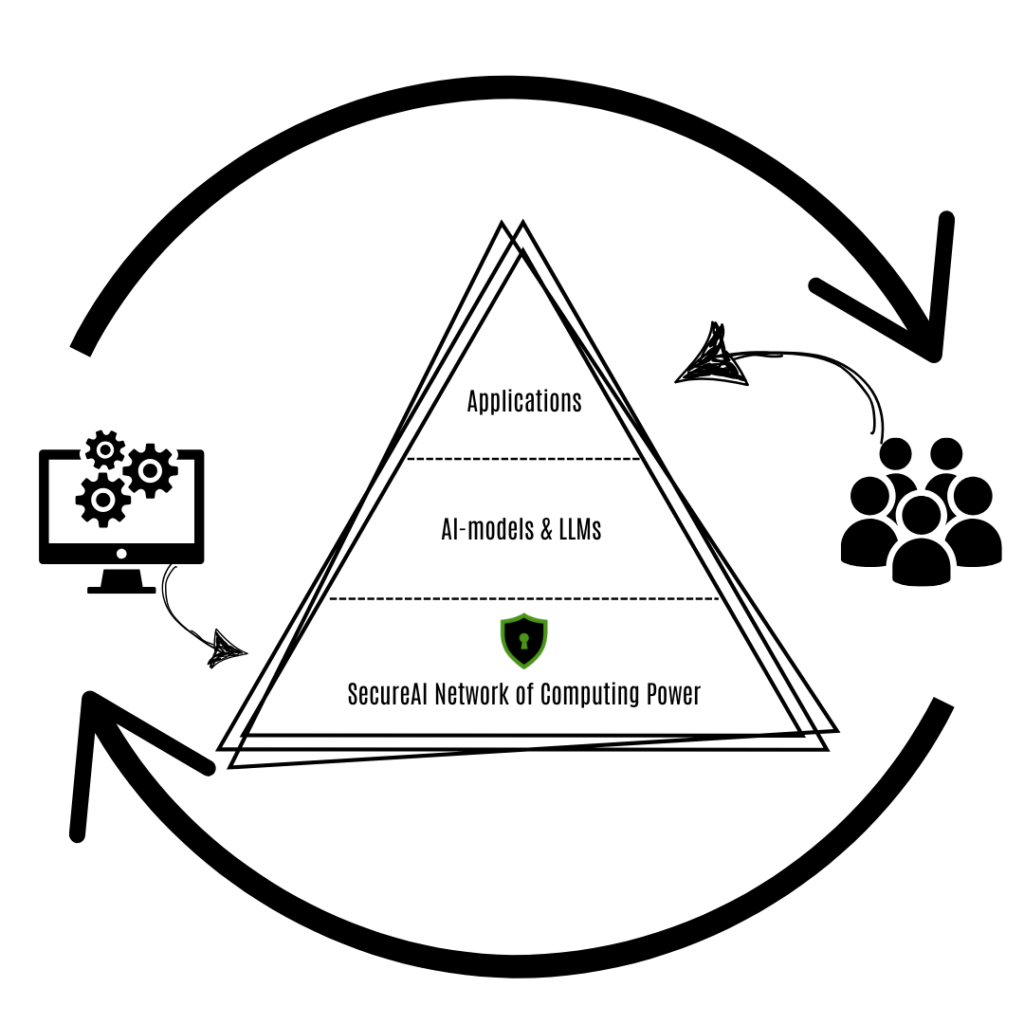

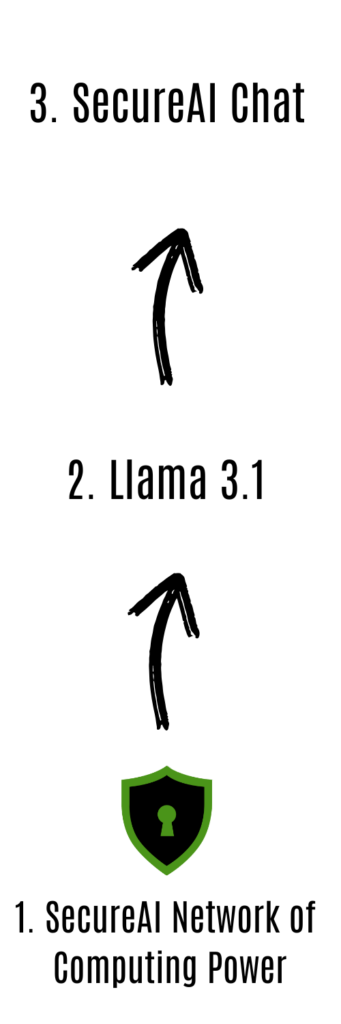

At SecureAI, we are developing a decentralized network of computers designed to run powerful AI models safely and make them accessible through simple applications. Here’s an overview of how our system works:

At the very foundation is the SecureAI Network, the engine that powers everything. It is made up of many computers working together in a decentralized way, so that no single participant can access the complete data. This keeps your data private and the system secure and reliable. Privacy isn't just a feature: it is built into how our network functions from the ground up.

The second layer is where the AI Models come into play. We use open-source models from well-known developers such as Meta. These models, such as large language models (LLMs), are the “brains” behind the AI: they are trained to understand and generate language, make predictions, or even create visuals. On their own, however, AI models don't have a user interface. They're like a powerful engine without a steering wheel or dashboard: they can do many impressive things, but they need a way to connect to people in a useful manner. In this layer, we run several of these open-source models securely on our network to provide their capabilities, but at this point they're still just raw intelligence.

That’s where User-Friendly Applications, the third and top layer, come in. These applications are built on top of the AI models and give you an easy, efficient way to use the AI’s capabilities. Think of them as the tools that make the AI accessible: they provide an interface, something you can click, type into, and interact with. For instance, we use the LLMs to build chat applications, like the one in our demo, or tools that generate images. The applications take the raw power of the AI models and present it in a way that’s simple and useful for you. They can be general or specialized, such as a ‘general chat’ or a ‘rug-pull detector for crypto projects’. The use case is determined by the design of the application.
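To make the layering concrete, here is a minimal Python sketch, assuming an application can be modeled as a shared model plus the instructions that define its purpose. The names `Application`, `ModelFn`, and `placeholder_model` are hypothetical, and the placeholder is not a real LLM; it simply echoes the first line of its prompt so you can see how two applications built on the same model differ only by design.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for a model served by the network: prompt in, text out.
ModelFn = Callable[[str], str]


@dataclass
class Application:
    """An application = a shared model + the design that gives it a purpose."""
    name: str
    instructions: str  # the application's design: what the model is asked to do
    model: ModelFn

    def ask(self, user_input: str) -> str:
        # Prepend the application's instructions to whatever the user typed
        prompt = f"{self.instructions}\n\nUser: {user_input}\nAssistant:"
        return self.model(prompt)


def placeholder_model(prompt: str) -> str:
    # Not a real LLM: returns the first line of the prompt (the instructions)
    # so the effect of each application's design is visible
    return prompt.splitlines()[0]


general_chat = Application("general chat", "You are a helpful assistant.", placeholder_model)
rug_pull_detector = Application(
    "rug-pull detector",
    "Assess the crypto project described by the user for common rug-pull warning signs.",
    placeholder_model,
)

print(general_chat.ask("Hello!"))  # You are a helpful assistant.
print(rug_pull_detector.ask("Token X"))
```

Both applications point at the same model object; only the `instructions` field changes, which is the sense in which the use case is set by the application's design rather than by the model.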

Here’s how our AI-powered demo chat works, using an analogy you might remember from the days of peer-to-peer downloads.

When you start a chat, the SecureAI Network handles your request. Think back to when people used peer-to-peer networks to download music or movies: you never knew exactly who you were downloading from, because the file came from many different sources at once, which kept it anonymous. SecureAI works the same way. Your request is handled by multiple computers in our network, and no single computer has all your data, which protects your privacy and security from the very beginning.
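The article does not say how SecureAI splits a request across computers. As a hedged illustration of the property “no single computer has all your data”, the sketch below swaps in additive secret sharing, a standard technique: the request bytes are split into shares, one per node, where any single share (indeed any set missing at least one share) is uniformly random, and only all shares together reconstruct the request. It shows the privacy property only, not how a model would compute on the shares; `split_into_shares` and `reconstruct` are hypothetical names.

```python
import secrets


def split_into_shares(data: bytes, n_nodes: int) -> list[bytes]:
    """Additively secret-share `data` byte by byte (mod 256) across n_nodes.

    Any n_nodes - 1 of the shares are uniformly random on their own and
    reveal nothing about `data`.
    """
    if n_nodes < 2:
        raise ValueError("need at least two nodes so that no single node holds the data")
    # The first n_nodes - 1 shares are uniformly random bytes
    shares = [secrets.token_bytes(len(data)) for _ in range(n_nodes - 1)]
    # The last share is chosen so that, byte by byte, all shares sum to data (mod 256)
    last = bytes((b - sum(s[i] for s in shares)) % 256 for i, b in enumerate(data))
    return shares + [last]


def reconstruct(shares: list[bytes]) -> bytes:
    """Only the sum of *all* shares recovers the original bytes."""
    return bytes(sum(column) % 256 for column in zip(*shares))


request = "Is this token a rug pull?".encode("utf-8")
shares = split_into_shares(request, n_nodes=3)  # one share per node
assert reconstruct(shares) == request
```

In a real deployment the shares would travel to different machines; here they stay in one list only so the round trip can be checked.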

The Open-Source AI Model running on the network, in this case Llama 3.1, then takes over. This is the “brain” that processes your input and generates a response. Just as the peer-to-peer network doesn’t store your downloads forever, our AI model doesn’t remember anything beyond your current session. Everything is temporary, kept just long enough to answer your questions during the session.
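Session-only memory can be pictured with the minimal sketch below, assuming the conversation lives in an in-memory object that is discarded when the session ends. `ChatSession` and its methods are hypothetical, not SecureAI code. Note that clearing a Python list only drops references to the data; it is not a secure memory wipe, which would need lower-level handling.

```python
from dataclasses import dataclass, field


@dataclass
class ChatSession:
    """Conversation history kept in memory for one session only (hypothetical sketch)."""
    history: list[tuple[str, str]] = field(default_factory=list)
    active: bool = True

    def add_turn(self, user_message: str, reply: str) -> None:
        if not self.active:
            raise RuntimeError("session has ended")
        self.history.append((user_message, reply))

    def context(self) -> str:
        # Earlier turns of *this* session let the model follow the conversation
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.history)

    def end(self) -> None:
        # Drop the conversation; nothing was persisted, so nothing carries over
        self.history.clear()
        self.active = False


session = ChatSession()
session.add_turn("What is Llama 3.1?", "An open-source model from Meta.")
print(session.context())
session.end()
print(session.history)  # []
```

Because nothing is ever written to disk, a new `ChatSession()` always starts empty, mirroring the “nothing beyond your current session” behavior described above.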

Finally, the User-Friendly Application delivers the response back to you through the chat interface, making complex AI easy to use. Once your chat session ends, all of its data is wiped completely: just like the old downloads that disappeared after use, we don’t keep anything. In addition, network participants earn SECAI tokens for providing computing power, and users pay with those tokens, creating a fair, decentralized system for everyone involved. The chat demo itself is free, so no payment is involved.
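The article states that users pay in SECAI and that participants earn tokens for compute, but not how a payment is divided. The sketch below assumes a hypothetical rule, a split proportional to the compute units each node contributed, with amounts as integers in an assumed smallest SECAI unit. `split_payment` and the node names are illustrative only.

```python
def split_payment(payment: int, compute_units: dict[str, int]) -> dict[str, int]:
    """Split a payment among nodes in proportion to the compute each provided.

    Amounts are integers in a hypothetical smallest SECAI unit, so nothing is lost
    to floating-point rounding: the leftover units from integer division go one
    at a time to the largest contributors.
    """
    total = sum(compute_units.values())
    if payment < 0 or total <= 0:
        raise ValueError("payment must be non-negative and some compute must be provided")
    payouts = {node: payment * units // total for node, units in compute_units.items()}
    remainder = payment - sum(payouts.values())
    # remainder is smaller than the number of contributing nodes, so one pass suffices
    for node in sorted(compute_units, key=compute_units.get, reverse=True)[:remainder]:
        payouts[node] += 1
    return payouts


print(split_payment(100, {"node-a": 3, "node-b": 1}))  # {'node-a': 75, 'node-b': 25}
```

Working in integer units keeps the payouts summing exactly to what the user paid, which matters for any token ledger regardless of the actual split rule.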